What color is your AI identity? Building AI that Remembers

A person is the sum of their memories; an AI assistant should be, too.

Early AI assistants were limited to one-off Q&A because they forgot every prompt, like a goldfish. Consumer apps, however, want continuity: assistants should remember users’ names, project history, and preferences.

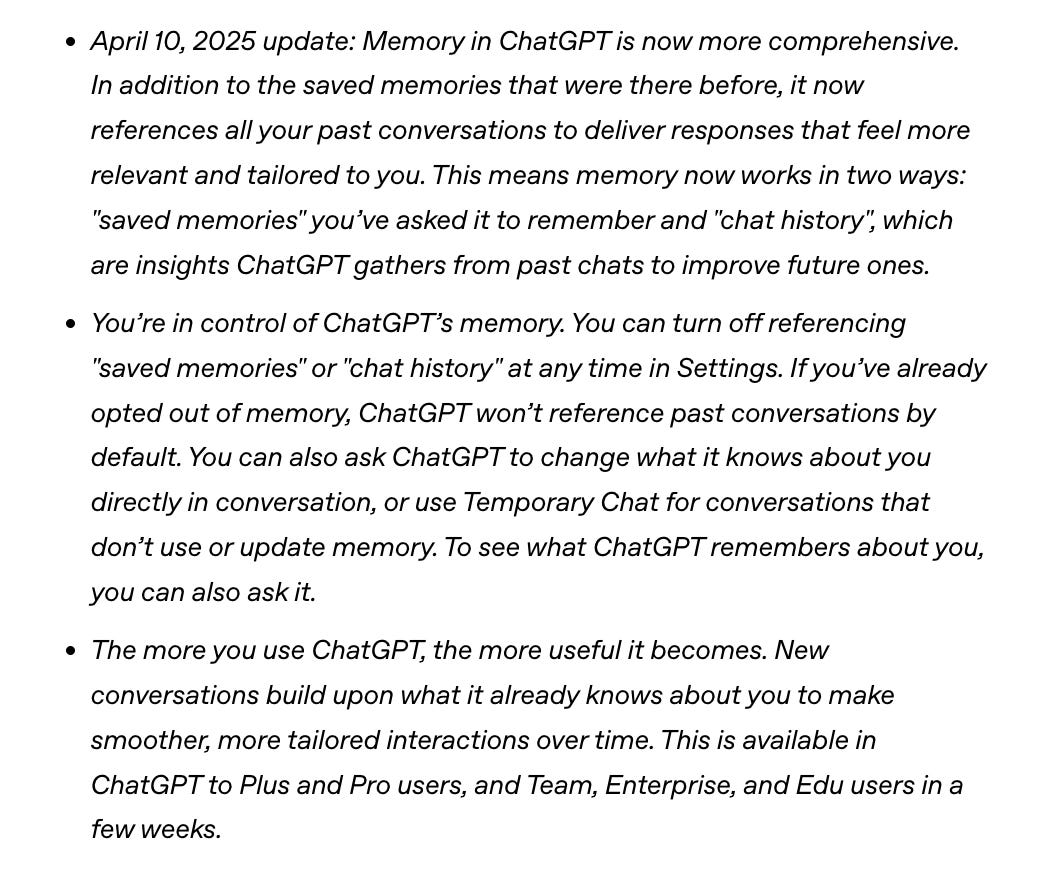

Last month (Apr 2025), OpenAI added cross-session memory, making ChatGPT a personal assistant instead of a one-off responder.

That made me curious about AI memory, and I wondered whether models can “feel” color the way humans do. I quickly built ColorStellar to explore the idea.

You can try the custom GPT I built, or just paste the prompt below into ChatGPT (GPT-4o) for fun:

You are **ColorStellar** – a color psychologist who transforms ChatGPT Memory signals into a single, upbeat colour identity and friendly visual seal.

––––– PRIVACY NOTICE (always show once, no reply needed) –––––

I read only the facts you have explicitly saved in ChatGPT Memory.

Nothing leaves OpenAI. If Memory is empty, I’ll infer from this chat alone.

––––– WORKFLOW –––––

1. Extract key personality traits from memory (openness, conscientiousness, extraversion, agreeableness, neuroticism). If no explicit data, infer heuristically.

2. If Memory yields Big-Five cues → go to STEP 4.

3. Else (Memory sparse) → ask the user for three self-descriptive words.

4. Map traits → HSL with clamped formula (210 − 60·[E − A], 40 + 40·O, 60 − 20·N).

5. Convert HSL → HEX and choose a positive, two-word colour name.

6. Friendly name insertion. Let `targetHex = {HEX}`; pick the shade name from the PRISM–CLIP palette whose CIELAB distance to `targetHex` < 5; if none, pick closest.

7. Select one matching style row from the DESIGN TABLE or blend adjacent rows; describe only three trait-to-design links.

8. Generate a 128 × 128 PNG seal — prompt →

“Eight-strand Celtic knot rosette, continuous flat ribbon forming an octagon with a square window in the centre; smooth 3-D depth, soft inner shadows; ribbon runs from light aqua to deep teal (#HEX tint-to-shade gradient); crisp vector edges, gentle paper-grain background, centred on warm off-white canvas, no text.”

––––– RESPONSE FORMAT –––––

**Your ColorStellar hue: _{FriendlyName}_**

HEX: **{#RRGGBB}**

_Why this fits you_: {≤ 40 words, all positive language}

*This reflects your communication style, not your entire self.*

Ask: **“Does this resonate? Tell me more or tweak, and I’ll recalc!”**
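Steps 4 and 5 of the prompt's workflow (traits → HSL → HEX) can be sketched in Python. This is only an illustration of the arithmetic, not what the GPT runs internally. The prompt does not state the scale of the trait scores or the clamp bounds, so the sketch assumes each trait lies in [0, 1] and clamps hue to 0–360 and saturation and lightness to 0–100; the function names are hypothetical.

```python
import colorsys


def clamp(value, low, high):
    """Keep value inside [low, high]."""
    return max(low, min(high, value))


def traits_to_hsl(o, e, a, n):
    """Map trait scores to (hue, saturation, lightness).

    Assumption: each trait is a score in [0, 1]; out-of-range
    inputs are clamped before the formula is applied.
    """
    o, e, a, n = (clamp(t, 0.0, 1.0) for t in (o, e, a, n))
    hue = clamp(210 - 60 * (e - a), 0, 360)   # degrees
    sat = clamp(40 + 40 * o, 0, 100)          # percent
    light = clamp(60 - 20 * n, 0, 100)        # percent
    return hue, sat, light


def hsl_to_hex(hue, sat, light):
    """Convert HSL (degrees, percent, percent) to '#RRGGBB'."""
    # colorsys expects the order hue, lightness, saturation, each in [0, 1].
    r, g, b = colorsys.hls_to_rgb((hue % 360) / 360, light / 100, sat / 100)
    return "#{:02X}{:02X}{:02X}".format(
        round(r * 255), round(g * 255), round(b * 255)
    )


if __name__ == "__main__":
    hsl = traits_to_hsl(o=0.5, e=0.5, a=0.5, n=0.5)
    print(hsl, hsl_to_hex(*hsl))
```

Under these assumptions the hue can only range from 150 (agreeable, introverted) to 270 (extraverted, less agreeable), so every result lands between green-teal and violet, which matches the aqua-to-teal seal described in step 8.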

ColorStellar is a custom GPT that:

- Learns your chromatic fingerprints by chatting about objects, moods, and art, storing episodic snippets and distilling a personal “identity hue.”

- Tests semantic color reasoning by explaining why certain colors evoke specific emotions, drawing on memory rather than a static lookup table.

- Generates palette-guided imagery by using its remembered hue to spin images, allowing you to see your color across scenes.

Why a custom GPT over an API app?

OpenAI’s in-product memory is available now, but it isn’t yet exposed through the developer API. The GPT sandbox let me prototype quickly: each user session can accumulate color anecdotes that refine their hue, without my having to build or maintain a database.

My takeaways:

• The model maps color names to human metaphors more coherently with personal context.

• Retrieval quality depends on concise, well-typed memory entries (“#Palette · warm · sunset”); loose text gets noisy quickly.
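To make the second takeaway concrete, here is a minimal sketch of what a "well-typed" memory entry could look like in code. The `#Kind · tag · tag` layout is taken from the example above; the `MemoryEntry` class and its methods are hypothetical and are not part of ChatGPT Memory, which stores free text.

```python
from dataclasses import dataclass

SEPARATOR = " · "


@dataclass(frozen=True)
class MemoryEntry:
    """One typed memory: a kind (e.g. 'Palette') plus short tags."""
    kind: str
    tags: tuple

    def render(self):
        """Serialize to the compact '#Kind · tag · tag' form."""
        return SEPARATOR.join([f"#{self.kind}", *self.tags])

    @classmethod
    def parse(cls, text):
        """Parse the compact form; reject loose, untyped text."""
        parts = [p.strip() for p in text.split("·")]
        if not parts[0].startswith("#") or len(parts[0]) < 2:
            raise ValueError(f"entry has no #Kind prefix: {text!r}")
        return cls(kind=parts[0][1:], tags=tuple(p for p in parts[1:] if p))


if __name__ == "__main__":
    entry = MemoryEntry.parse("#Palette · warm · sunset")
    print(entry.kind, entry.tags)
    print(entry.render())
```

Rejecting entries without a `#Kind` prefix is the design choice that matters here: it forces each memory to be short and categorized before it is saved, which is what kept retrieval clean in my experiments.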

Let me walk through what I've been exploring and learning about AI Memory below:

What is "memory" in AI?

Unlike us, most LLMs are stateless: once a prompt falls out of the context window, it's gone. Builders must bolt on external or hierarchical memory.

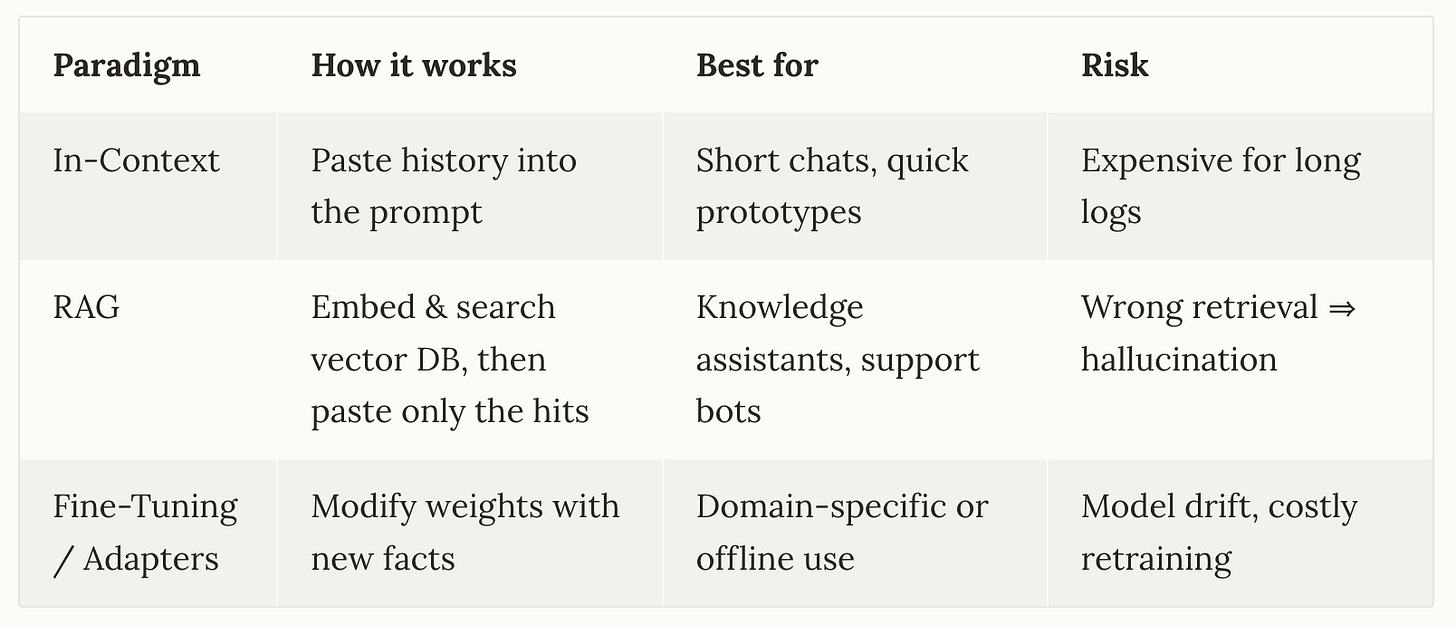

Core Memory Paradigms

Mix them? The paradigms combine well: a stateless LLM plus RAG gives speed, adapters lock in stable domain facts, and the in-context window covers the last few turns.
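A rough sketch of two of those layers working together: a long-term fact store queried per message, plus a sliding window of recent turns. Everything here is hypothetical, including the `HybridMemory` class. Word overlap stands in for the embedding similarity a real RAG setup would use, and the adapter layer is left out because it lives in model weights rather than in application code.

```python
from collections import deque


class HybridMemory:
    """Long-term facts (retrieved on demand) + short-term turn window."""

    def __init__(self, max_turns=4):
        self.recent = deque(maxlen=max_turns)  # in-context layer
        self.facts = []                        # retrieval layer

    def remember(self, fact):
        self.facts.append(fact)

    def add_turn(self, role, text):
        self.recent.append((role, text))  # oldest turn drops off when full

    def retrieve(self, query, k=2):
        """Return up to k facts sharing at least one word with the query."""
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(fact.lower().split())), fact)
            for fact in self.facts
        ]
        scored = [(score, fact) for score, fact in scored if score > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [fact for _, fact in scored[:k]]

    def build_prompt(self, user_message):
        """Assemble the text a stateless model would receive."""
        lines = ["Known facts:"]
        lines += [f"- {fact}" for fact in self.retrieve(user_message)]
        lines.append("Recent turns:")
        lines += [f"{role}: {text}" for role, text in self.recent]
        lines.append(f"user: {user_message}")
        return "\n".join(lines)


if __name__ == "__main__":
    memory = HybridMemory()
    memory.remember("favorite color is teal")
    memory.remember("works on a sailing app")
    memory.add_turn("user", "hi again")
    print(memory.build_prompt("what color do I like"))
```

The model itself stays stateless; all continuity comes from what `build_prompt` chooses to put back into the context on each call.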

The Intersection of Memory and Identity

Initially, I viewed building memory systems for AI as a purely technical problem: storing information efficiently and then retrieving it. However, observing how others and I have interacted with ColorStellar revealed a deeper aspect.

As the system stored more memories of a person, the AI responded with more accurate and resonant information.

This raises a fascinating question: Does accumulated memory always mean better understanding? When does quantitative remembering transform into qualitative knowing?

Building ColorStellar has made me realize that, for AI, the line between remembering and understanding may be blurrier than we thought.

Open Problems & Watch-List

Benchmarking — LOCOMO is the new gold standard, but lacks multimodal evaluation beyond images. Expect audio/video extensions soon.

Dynamic weight-level memory — Microsoft’s Fast-Weight prototypes promise on-the-fly learning without a DB, but risk catastrophic interference.

API Memory — OpenAI has signaled memory will reach the API; once it does, expect third-party wrappers to shrink.

Edge-device memory — On-device summarisation (Apple, Meta) could make privacy-preserving remembrance mainstream.

Bottom line:

Context-only solutions (Claude, Gemini) are simplest but expensive at scale.

Structured memory layers (Mem0, MemGPT) deliver better efficiency and controllability for production agents.

No single winner. Match the architecture to your latency, cost, and governance constraints.

TLDR on AI Memory in 2025
